A Dark Day For Educational Measurement In The Sunshine State

Just this week, Florida announced its new district grading system. These systems have been popping up all over the nation, and given that designing one is a requirement for states applying for No Child Left Behind waivers, we are sure to see more.

I acknowledge that the designers of these schemes have the difficult job of balancing accessibility and accuracy. Moreover, the latter requirement – accuracy – cannot be directly tested, since we cannot know “true” school quality. As a result, even to the extent that school quality can be partially approximated using test scores, disagreements over which specific measures to include, and how to include them, are inevitable (see these brief analyses of Ohio and California).

As I’ve discussed before, there are two general types of test-based measures that typically comprise these systems: absolute performance and growth. Each has its strengths and weaknesses. Florida’s attempt to balance these components is a near total failure, and it shows in the results.

Absolute performance tells you how high or low students scored – for example, it might consist of proficiency rates or average scores. These measures are very stable between years – aggregate district performance indicators don’t tend to change very much over short periods of time. But they are also highly correlated with student characteristics, such as income. In other words, they are measures of student performance, but tell you very little about the quality of instruction going on in a school or district.

Growth measures, on the other hand, are the exact opposite. States usually use “value-added” scores or, more commonly, changes in proficiency rates for this component. These measures can be extremely unstable between years (as in Ohio), but in the case of well-done value-added and other growth model estimates (good models using multiple years of data at the school level), they can actually tell you something about whether the school had some effect on student testing performance (this really isn’t the case for raw changes in proficiency, as discussed here).

So, there’s a trade-off here. I would argue that growth-based measures are the only ones that, if they’re designed and interpreted correctly, actually measure the performance of the school or district in any meaningful way, but most states that have released these grading systems seem to include an absolute performance component. I suppose their rationale is that districts/schools with high scores are “high-performing” in a sense, and that parents want to know this information. That’s fair enough, but it’s important to reiterate that schools’ average scores or proficiency rates, especially if they’re not adjusted to account for student characteristics and other factors, tell you little about the effectiveness of a school or district.

In any case, Florida’s grading system is half absolute performance and half growth: 50 percent is based on a district’s proficiency rate (level 3 or higher) in math, reading, writing and science (12.5 percent each); 25 percent is based on the percent of all students “making gains” in math and reading (12.5 percent each); and, finally, 25 percent is based on the percent of students in the “bottom 25 percent” (or the lowest 30 students) in math and reading who are “making gains” in that subject (12.5 percent each).

Put differently, there are eight measures here – four based on absolute performance (proficiency rates in each of four subjects), and the other four (seemingly) growth-based (students “making gains” in math and reading, and the percent of the lowest-scoring 25 percent doing so in both subjects). Each of the eight measures is simply the percentage of students meeting a certain benchmark (e.g., proficient or “making gains”). Districts therefore receive between zero and 800 points, and letter grades (A-F) are assigned based on these final point totals (I could not find a description of how the thresholds for each grade were determined).*
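To make the arithmetic concrete, here is a minimal Python sketch (3.9+) of the tally described above. The measure names are hypothetical labels, and the only grade cutoff encoded is the 525-point threshold for an “A” mentioned later in this post; the other thresholds are not given, so they are not reproduced here.

```python
# Hypothetical labels for Florida's eight district measures; each value is
# the percentage (0-100) of students meeting that measure's benchmark.
MEASURES = (
    "proficient_math", "proficient_reading",
    "proficient_writing", "proficient_science",
    "gains_math", "gains_reading",
    "gains_bottom25_math", "gains_bottom25_reading",
)
A_CUTOFF = 525  # points needed for an "A" this year, per the post


def district_points(percentages: dict[str, float]) -> float:
    """Add the eight percentages into a total between 0 and 800 points."""
    missing = [name for name in MEASURES if name not in percentages]
    if missing:
        raise ValueError(f"missing measures: {missing}")
    for name in MEASURES:
        if not 0 <= percentages[name] <= 100:
            raise ValueError(f"{name} is not a percentage: {percentages[name]}")
    return sum(percentages[name] for name in MEASURES)


def earns_a(points: float) -> bool:
    """True if the point total clears the 525-point "A" threshold."""
    return points >= A_CUTOFF


# Each measure can contribute at most 100 of 800 points (12.5 percent), so the
# four proficiency rates plus the two all-student "gains" rates make up 75 percent.
print(6 * 100 / 800)  # → 0.75
```

For example, a district at 70 percent on every measure gets `district_points({m: 70 for m in MEASURES})`, or 560 points, which clears the “A” line.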

Now, here’s the amazing thing. Two of Florida’s four “growth measures” – the “percent of all students making gains” in math and reading – are essentially absolute performance measures. How is this possible? Well, students are labeled as “making gains” if they meet one of three criteria. First, they can move up a category – for example, from “basic” to “proficient.” Second, they can remain in the same category between years but increase their actual score by more than one year’s growth (i.e., increase a minimum number of points, which varies by grade and subject).

Third – and here’s the catch – students who are proficient (level 3) or better in year one, and remain proficient or better in year two without decreasing a level (e.g., from “advanced” to “proficient”), are coded as “making gains.” In other words, any student who is “proficient” or “advanced” in the first year and remains in that category (or moves up) the second year counts as having “made gains,” even if their actual scores decrease or remain flat.
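The three criteria can be written as a single rule. This is a sketch under stated assumptions, not the state’s actual code: levels are integers 1 through 5, with level 3 or higher counted as proficient; `min_growth` is a hypothetical stand-in for the grade- and subject-specific “year’s worth of growth,” whose actual values aren’t given here; and treating that threshold as inclusive is also an assumption.

```python
PROFICIENT_LEVEL = 3  # "level 3 or higher" counts as proficient


def making_gains(level_y1: int, level_y2: int,
                 score_y1: float, score_y2: float,
                 min_growth: float) -> bool:
    """Apply the three "making gains" criteria to one student.

    min_growth is a hypothetical stand-in for the grade- and
    subject-specific one-year growth threshold.
    """
    # Criterion 1: moved up at least one achievement level.
    if level_y2 > level_y1:
        return True
    # Criterion 2: stayed in the same level and gained at least min_growth
    # points (treating the threshold as inclusive is an assumption).
    if level_y2 == level_y1 and score_y2 - score_y1 >= min_growth:
        return True
    # Criterion 3: proficient in year one and did not drop a level in year two.
    # The actual scores are never consulted here.
    if level_y1 >= PROFICIENT_LEVEL and level_y2 >= level_y1:
        return True
    return False
```

Note that the third branch never looks at the scores: a proficient student whose score falls 10 points, `making_gains(3, 3, 350, 340, min_growth=15)`, still counts as “making gains.”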

This is essentially double counting. In any given year, the only way a student can be counted as proficient but not “making gains” is if that student scores “advanced” in year one but only “proficient” in year two. This happens, but it’s pretty rare. One way to illustrate how rare is to see how much difference there is between districts’ proficiency rates and the percent of students “making gains,” using data from Florida’s Department of Education.
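The double-counting claim can be checked by brute force. The sketch below assumes the same five-level scale with level 3 as the proficiency bar, enumerates every pair of year-one and year-two levels, and keeps the students who are proficient in year two but not “making gains.” It uses only the level-based criteria, because the points-based criterion applies only to students who stay in the same level, and any proficient student who does so already qualifies.

```python
from itertools import product

LEVELS = range(1, 6)  # assumed five-level scale
PROFICIENT = 3        # level 3 or higher counts as proficient


def gains_by_level(y1: int, y2: int) -> bool:
    """Level-only version of the rule: moved up, or stayed proficient
    without dropping a level. The points criterion is omitted because it
    only matters for students who stay at a non-proficient level."""
    moved_up = y2 > y1
    stayed_proficient = y1 >= PROFICIENT and y2 >= y1
    return moved_up or stayed_proficient


# Every (year-one level, year-two level) pair that is proficient in year two
# but does not count as "making gains".
proficient_without_gains = [
    (y1, y2)
    for y1, y2 in product(LEVELS, repeat=2)
    if y2 >= PROFICIENT and not gains_by_level(y1, y2)
]
print(proficient_without_gains)  # → [(4, 3), (5, 3), (5, 4)]
```

The only survivors are drops within the proficient-or-better range, which is the “advanced to proficient” case spread across the upper levels.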

The scatterplot below presents the relationship between these two measures (each dot is a district).

The correlation is almost 0.8, which is very high, and the average difference between the two scores is plus or minus 6.5 percentage points. And the correlation is even higher for reading (0.862).

So, 50 percent of a district’s final score is based on its proficiency rates, and another 25 percent is based on the percent of all students “making gains.” For the most part, these are roughly the same measure. Three-quarters of each district’s score, then, is basically absolute performance.

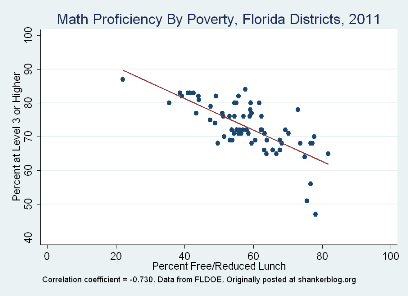

And, of course, as mentioned above, they tell you more about the students in that district than about the effectiveness of the schools (at least as measured by test scores). Income is not a perfect proxy for student background (and lunch program eligibility isn’t a great income measure), but let’s take a look at the association between proficiency rates in math and district-level poverty (as measured by the percent of students eligible for free/reduced lunch).

Here’s another strong, tight relationship. Districts with high math proficiency rates tend to be those with low poverty rates, and vice-versa. Once again, the relationship between poverty and reading proficiency (not shown) is even stronger (-0.817), and the same goes for science (-0.792). The correlation is moderate but still significant in the case of writing (-0.565).

As expected, given the similarity of the measures, there is also a discernible relationship between poverty and the percent of students “making gains” in math, as in the graph below.

The slope is not as steep, but the dots are more tightly clustered around the red line (which reflects the average relationship between the two variables). The overall correlation is not quite as strong as for math proficiency, but it’s nevertheless a substantial relationship (and, again, higher in reading). Since most students in more affluent districts are proficient, and since they don’t often move down a category, the “gains” here are really just proficiency rates with a slight twist.

The only measure included in Florida’s system that is independent of student characteristics such as poverty (and of other measures) is the percent of “bottom 25 percent” students “making gains.” I will simplify the details a bit, but essentially, the state calculates the score in math and reading that is equivalent to the 25th percentile (i.e., one quarter of students scored below this cutoff point). Among the students scoring below this cutoff point, those who either made a year’s worth of progress or moved up a category are counted as “making gains.”
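Following the simplified description above, the bottom-quartile measure can be sketched as below. The `Student` record and `min_growth` threshold are hypothetical, the cutoff is assumed to be based on prior-year scores, and `statistics.quantiles` with `method="inclusive"` is just one reasonable way to locate the 25th percentile; the state’s exact percentile method (and its “lowest 30 students” provision) is not modeled.

```python
from statistics import quantiles
from typing import NamedTuple


class Student(NamedTuple):
    prior_score: float    # assumption: the cutoff uses prior-year scores
    moved_up: bool        # moved up at least one achievement level
    points_gained: float  # year-two score minus year-one score


def bottom_quartile_gain_rate(students: list[Student],
                              min_growth: float) -> float:
    """Percent of students below the 25th-percentile cutoff who "made gains"
    (moved up a level or gained at least min_growth points)."""
    cutoff = quantiles([s.prior_score for s in students], n=4,
                       method="inclusive")[0]
    bottom = [s for s in students if s.prior_score < cutoff]
    if not bottom:
        raise ValueError("no students fall below the cutoff")
    gained = sum(1 for s in bottom
                 if s.moved_up or s.points_gained >= min_growth)
    return 100 * gained / len(bottom)
```

Unlike the all-student “gains” rate, nothing here rewards a student merely for having started out proficient, which is why this component is not simply a proxy for proficiency.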

This measure (worth 25 percent of a district’s final score) is at least not a proxy for student background, and the state deserves credit for focusing on its lowest-performing students (a feature I haven’t seen in other systems). But it is still a terrible gauge of school effectiveness. Among other problems (see here), it ignores the fact that changes in raw testing performance are due largely to either random error or factors outside of schools’ control. In addition, students who move up a category are coded as “making gains,” even if their actual progress is minimal.

Let’s bring this all home by looking at the relationship between districts’ final grade scores (total points), which are calculated as the sum of all these measures, and poverty.

There you have it – a very strong relationship by any standard. Very few higher-poverty districts got high scores, and vice-versa. District-level free/reduced lunch rates explain over 60 percent of the variation in final scores.

In fact, among the 30 districts (out of about 70 total) that scored high enough to receive a grade of “A” this year (525 total points or more), only one had a poverty rate in the highest-poverty quartile (top 25 percent). Among the 17 districts in this highest-poverty quartile, 13 received grades of "C" or worse (only one other district in the whole state got a "C" or worse, and it was in the second highest poverty quartile). Conversely, only two districts with a poverty rate in the lowest 25 percent got less than an “A” (both received “B’s”).
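The quartile breakdown above is a simple cross-tabulation. Here is one way to build it, assuming a list of (free/reduced-lunch percent, letter grade) pairs, one per district. The cut points come from `statistics.quantiles`, and districts sitting exactly on a cut point go into the higher-poverty quartile, a convention of this sketch rather than anything the state specifies. The sample list is invented for illustration.

```python
from bisect import bisect_right
from collections import Counter
from statistics import quantiles


def grades_by_poverty_quartile(
        districts: list[tuple[float, str]]) -> dict[int, Counter]:
    """Map poverty quartile (1 = lowest poverty, 4 = highest) to a count of
    letter grades. Each district is a (free/reduced-lunch percent, grade) pair."""
    cuts = quantiles([rate for rate, _ in districts], n=4, method="inclusive")
    table: dict[int, Counter] = {q: Counter() for q in range(1, 5)}
    for rate, grade in districts:
        # Number of cut points at or below this rate gives quartile 1..4.
        quartile = bisect_right(cuts, rate) + 1
        table[quartile][grade] += 1
    return table


# Invented data for eight hypothetical districts.
sample = [(10, "A"), (20, "A"), (30, "B"), (40, "A"),
          (50, "B"), (60, "C"), (70, "C"), (80, "D")]
table = grades_by_poverty_quartile(sample)
```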

Any state that uses unadjusted absolute performance indicators in its grading system will end up with ratings that are biased in favor of higher-income schools and districts. The extent of this bias will depend on how heavily the absolute metrics are weighted, as well as on how the growth-based measures pan out (remember that growth-based measures, especially if they’re poorly constructed, can also be biased by student characteristics).

Florida’s system is unique in that it portrays absolute performance as growth, and in doing so, takes this bias to a level at which the grades barely transmit any meaningful educational information.

In other words, Florida’s district grades are largely a function of student characteristics, and have little to do with the quality of instruction going on in those districts. Districts serving disadvantaged students will have a tougher time receiving good grades even if they make large actual gains, while affluent districts are almost guaranteed a top grade due to nothing more than the students they serve.

- Matt Di Carlo

*****

* It’s worth pointing out that, while these district-level grades are based entirely on the results of Florida’s state tests, there is also a school-level grading system. It appears to be the same as the district scheme for elementary and middle schools (800 points, based on state tests), but for high schools, there is an additional 800 points based on several alternative measures, including graduation rates and participation in advanced coursework (e.g., AP classes). See here for more details.

Really well done. But you'll also notice that Florida's FCAT evaluation system is designed to avoid comparing and assessing performance between higher performing/wealthier schools. The standard of failure/excellence is reaching the middling Level 3 on the test. But there are five levels, two of which are higher than Level 3, and they don't factor into the evaluation. Schools are not measured by what they actually score, only by how many kids get to Level 3. One of many ways this system is simply rigged. You should say it directly.

You can read about this from the ground in Florida here.

http://www.lakelandlocal.com/2012/01/there-are-5-fcat-levels-not-3-so-s…

Mr. DiCarlo- I have written a response to this post. I hope you will give it some consideration: http://jaypgreene.com/2012/02/08/the-dark-days-of-educational-measureme…

Superintendent Klein from New York used a far better approach -- New York City measured schools with the same socioeconomic base against each other. This allowed a school in a poor area to earn an A, if they were among the best in class.

This would upset the apple cart for schools getting As because they have the right kids, but it would be a far fairer measurement system.

Hi Mark,

See here: http://shankerblog.org/?p=5785

Thanks for the comment,

MD