The Superintendent Factor

One of the more visible manifestations of what I have called “informal test-based accountability” -- that is, how testing results play out in the media and public discourse -- is the phenomenon of superintendents, particularly big city superintendents, making their reputations on the testing results posted during their administrations.

In general, big city superintendents are expected to promise large testing increases, and their success or failure is to no small extent judged on whether those promises are fulfilled. Several superintendents almost seem to have built entire careers on a few (misinterpreted) points in proficiency rates or NAEP scale scores. This particular phenomenon, in my view, is rather curious. For one thing, any district leader will tell you that many of their core duties, such as improving administrative efficiency, communicating with parents and the community, and strengthening their districts' finances, might have little or no impact on short-term testing gains. In addition, even those policies that do have such an impact often take many years to show up in aggregate results.

In short, judging superintendents based largely on the testing results during their tenures seems misguided. A recent report issued by the Brown Center at Brookings, and written by Matt Chingos, Grover Whitehurst and Katharine Lindquist, adds a little bit of empirical insight to this viewpoint.

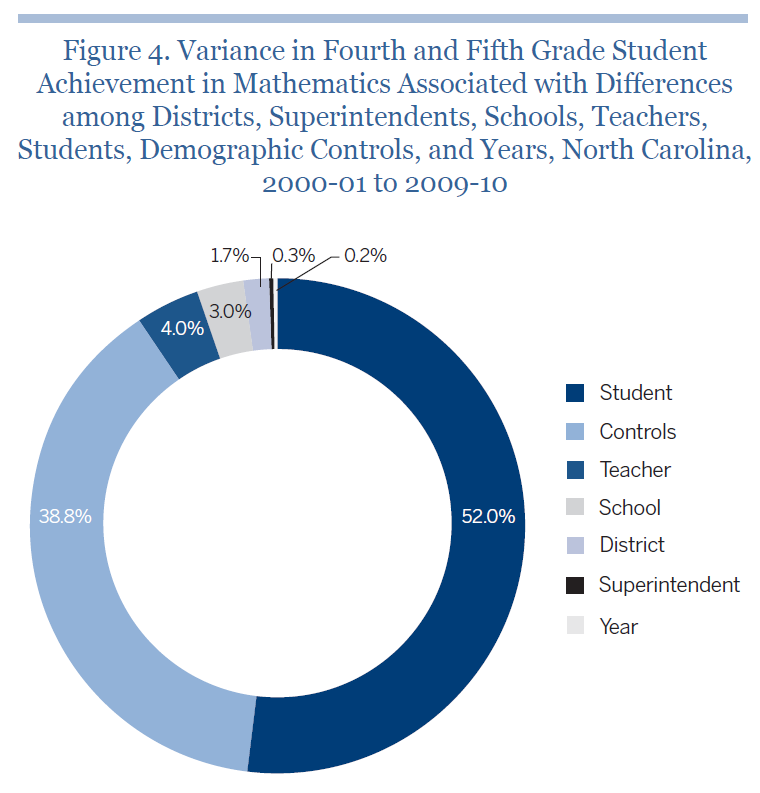

The authors look at several outcomes, including superintendent tenure (usually quite short), and I would, as usual, encourage you to read the entire report. But one of the main analyses is what is called a variance decomposition. Variance decompositions, put simply, partition the variation in a given outcome (in this case, math test scores among fourth and fifth graders in North Carolina between 2001 and 2010) into portions that can be (cautiously) attributed to various factors. For example, one might examine how much variation is statistically "explained" by students, schools, districts and teachers.
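To make the idea concrete, here is a minimal sketch of a variance decomposition on simulated data. It is not the report's model or data: the scores, the number of schools and teachers, and the variance values are all made up for illustration, and the simple group-mean approach below stands in for the more careful multilevel methods that researchers actually use.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical setup: 50 schools, 4 teachers per school, 25 students per teacher.
n_schools, teachers_per_school, students_per_teacher = 50, 4, 25
n_teachers = n_schools * teachers_per_school

# One row per student; teacher ids are unique across schools (teachers nested in schools).
teacher_ids = np.repeat(np.arange(n_teachers), students_per_teacher)
school_ids = teacher_ids // teachers_per_school

# Made-up variance components (not taken from the report).
school_effect = rng.normal(0.0, np.sqrt(3.0), n_schools)
teacher_effect = rng.normal(0.0, np.sqrt(4.0), n_teachers)
student_noise = rng.normal(0.0, np.sqrt(93.0), teacher_ids.size)

scores = school_effect[school_ids] + teacher_effect[teacher_ids] + student_noise


def group_means(values, ids):
    """Return, for every student, the mean score of the group that student belongs to."""
    _, inverse = np.unique(ids, return_inverse=True)
    means = np.bincount(inverse, weights=values) / np.bincount(inverse)
    return means[inverse]


school_mean = group_means(scores, school_ids)
teacher_mean = group_means(scores, teacher_ids)

# Split each score into school, teacher-within-school and student-within-teacher pieces.
# Because teachers are nested in schools, the three pieces are uncorrelated and
# their variances add up to the total variance.
total = scores.var()
shares = {
    "school": school_mean.var() / total,
    "teacher": (teacher_mean - school_mean).var() / total,
    "student": (scores - teacher_mean).var() / total,
}

for level, share in shares.items():
    print(f"{level}: {share:.1%}")
```

One caveat on this sketch: raw group means also pick up some student-level noise, so the school and teacher shares it reports will tend to be somewhat larger than the values built into the simulation. Multilevel models adjust for this, which is one reason to read any single decomposition cautiously.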

Chingos et al. include a “level” in their decomposition that is virtually never part of these exercises – the superintendent in office. A summary of the results is presented in the accompanying graph, which is taken directly from the report.

As you can see, most of the variation in testing outcomes (52 percent) is found between students (this is unmeasured, unobserved variation, including measurement error). An additional 38.8 percent is “explained” by the control variables they include in the model, such as subsidized lunch eligibility and race/ethnicity. A student’s teacher and school account for four and three percent, respectively. And, finally, a rather tiny portion of the variation in math test scores is associated with which individual sits in the superintendent’s office – about 0.3 percent, or three tenths of one percent (the results were similar in reading).

Variance decompositions are useful (I would even say fun), but they of course require cautious interpretation (and the authors lay out these issues). For one thing, this is just one decomposition, using data from one state. In addition, the factors in the graph above are not entirely independent – for example, part of the job of a superintendent is to ensure that schools and districts function well and to help attract and retain good teachers. And, finally, the 0.3 percent finding is an overall share of the variation, so it certainly does not rule out the possibility that some individual superintendents have a larger measured impact on testing outcomes (though imprecise measurement makes it difficult to identify them).

That said, this report speaks directly to the aforementioned “informal accountability” dynamic, in which superintendents, particularly those in large urban districts, are expected to "deliver" large aggregate testing increases during their terms. This analysis would seem to suggest, albeit tentatively, that these expectations are misplaced. The job of a school superintendent is extraordinarily difficult and important, but it may not exert anything resembling the kind of influence on short-term testing outcomes that is often assumed in our public discourse.

So, perhaps it is time that we stop allowing superintendents to build reputations based on (often misinterpreted) testing data during their terms, and stop judging them based so heavily on this criterion. The job is hard enough as is.

- Matt Di Carlo