Through The Sunshine State's Accountability Systems, Darkly

Some Florida officials are still having trouble understanding why they're finding no relationship between the grades schools receive and the evaluation ratings of teachers in those schools. For his part, new Florida education Commissioner Tony Bennett is also concerned. According to the article linked above, he acknowledges (to his credit) that the two measures are different, but is also considering "revis[ing] the models to get some fidelity between the two rankings."

This could turn into a risky situation. As discussed in a recent post, it is important to examine the results of the new teacher evaluations, but there is no reason to expect a strong relationship between these ratings and the school grades, as they largely measure different things (and imprecisely at that). The school grades are mostly (but not entirely) driven by how highly students score, whereas teacher evaluations are, to the degree possible, designed to be independent of these absolute performance levels. Florida cannot validate one system using the other.

However, as also mentioned in that post, this is not to say that there should be no relationship at all. For example, both systems include growth-oriented measures (albeit using very different approaches). In addition, schools with lower average performance levels sometimes have trouble recruiting and retaining good teachers. Due to these and other factors, the reasonable expectation is to find some association overall, just not one that's extremely strong. And that's basically what one finds, even using the same set of results upon which the claims that there is no relationship are based.

One quick but important note before discussing these results: There are still a lot of Florida teachers - eight percent as of January - who have not yet received an evaluation rating. This might affect the final distributions, and it's certainly worth bearing in mind when reviewing them.

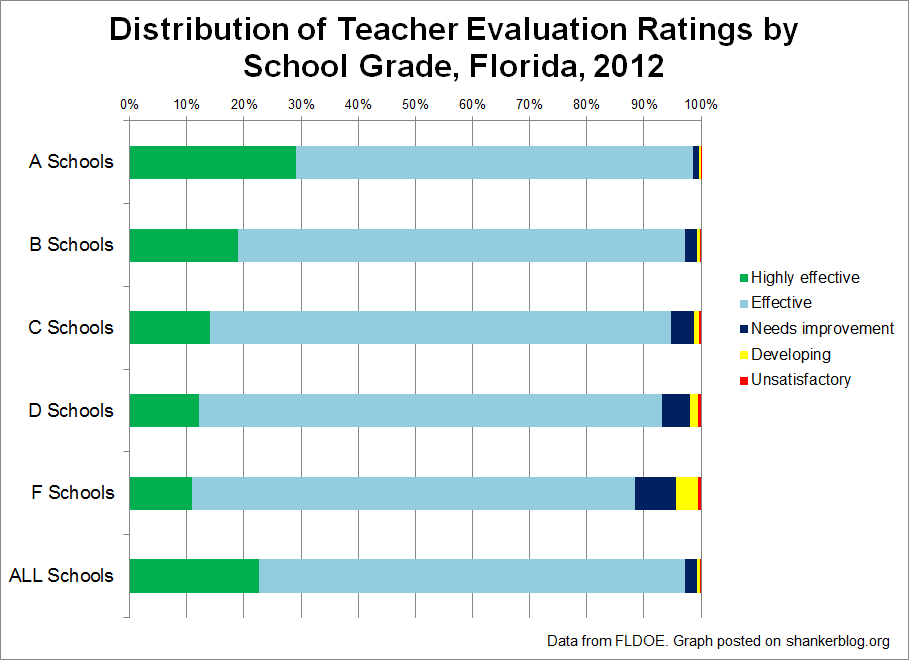

Moving on, the data suggest that there actually is a relationship between school grades and teacher evaluation ratings. The graph below presents the distribution of ratings by school grade (not including unrated teachers).

You can see the percentages of teachers rated “highly effective” (the green sections of the bars) increase as school grades get higher. For instance, almost 30 percent of teachers in A-rated schools receive the highest rating, compared with just over 10 percent of their counterparts teaching in F-rated schools. Conversely, the total proportion of teachers rated “needs improvement” or lower decreases as grades get higher.
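For readers who want to reproduce a breakdown like the graph above from teacher-level records, it is just a cross-tabulation with each grade's row converted to percentages. The sketch below assumes a hypothetical input of `(school_grade, rating)` pairs with unrated teachers already dropped; the function name and the four category labels are illustrative assumptions, not Florida's official data format.

```python
from collections import Counter, defaultdict

# Assumed category labels, ordered lowest to highest; illustrative only,
# not taken from Florida's official data files.
RATINGS = ["unsatisfactory", "needs improvement", "effective", "highly effective"]


def rating_shares_by_grade(records):
    """Percent of rated teachers in each rating category, by school grade.

    records: iterable of (school_grade, rating) pairs, one per rated teacher.
    Unrated teachers are assumed to have been dropped beforehand.
    """
    counts = defaultdict(Counter)
    for grade, rating in records:
        if rating not in RATINGS:
            raise ValueError(f"unknown rating: {rating!r}")
        counts[grade][rating] += 1

    shares = {}
    for grade, counter in counts.items():
        total = sum(counter.values())
        # Each grade's row sums to 100, matching a 100%-stacked bar.
        shares[grade] = {r: 100.0 * counter[r] / total for r in RATINGS}
    return shares


# Toy records for illustration only (not real Florida data).
toy = [("A", "highly effective"), ("A", "effective"),
       ("F", "effective"), ("F", "needs improvement")]
print(rating_shares_by_grade(toy)["A"]["highly effective"])  # 50.0
```

Normalizing within each grade, rather than across the whole state, is what lets schools with very different numbers of teachers be compared on the same bar chart.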

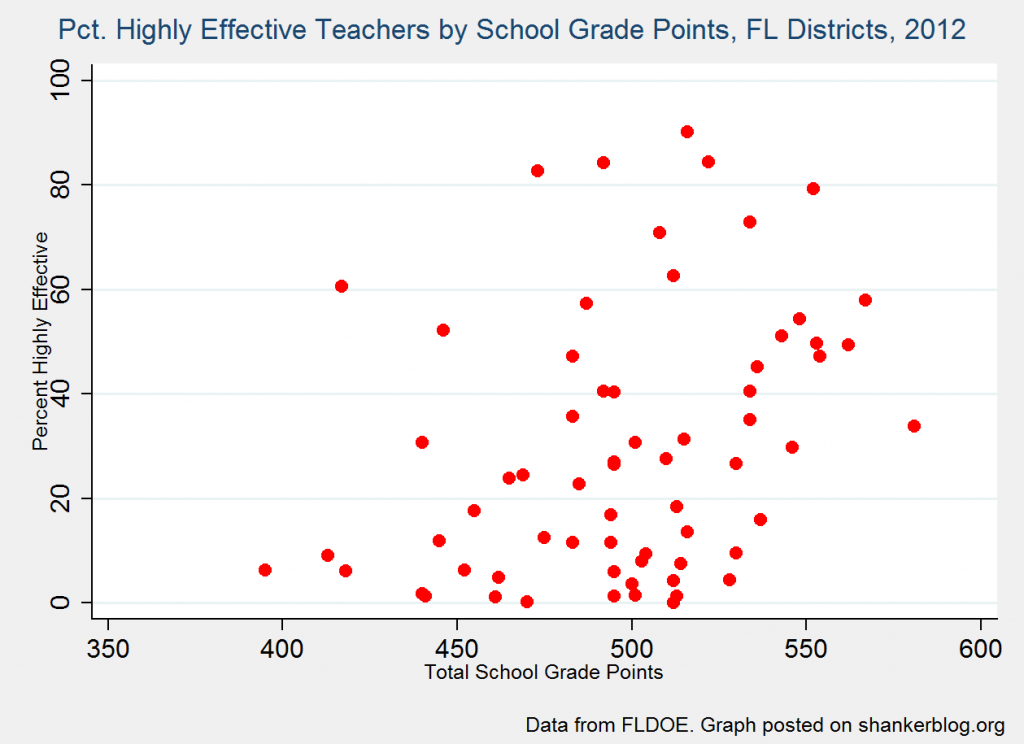

You can also also see this modest association in the scatterplot below, which presents the percentage of teachers in each district receiving “highly effective” ratings by the number of points that district received on the state’s grading system (the state sorts these point totals into A-F grades).

It’s a very noisy relationship, but you can see a slight tendency - schools receiving more points also exhibit higher percentages of "highly effective" evaluation ratings. (And the same basic conclusion applies when looking at the percentages of teachers receiving the lower ratings, which decrease as school point totals increase.)
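A "very noisy" relationship with a "slight tendency" is what a modest correlation coefficient looks like. The sketch below computes Pearson's r and applies it to simulated data in which a small true slope is buried in noise, to illustrate how a real but weak association can look like no relationship at a glance. The function names and simulation parameters (`n`, `slope`, `seed`) are arbitrary assumptions for illustration, not estimates from the Florida data.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate_weak_association(n=2000, slope=0.3, seed=1):
    """Simulated data: a small true slope buried in unit-variance noise.

    x stands in for a standardized point total and y for a standardized
    share of top ratings; both are synthetic, not Florida data.
    """
    rng = random.Random(seed)
    xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
    ys = [slope * x + rng.gauss(0.0, 1.0) for x in xs]
    return pearson_r(xs, ys)


# With slope=0.3 the population correlation is 0.3 / sqrt(1 + 0.3**2), about 0.29.
print(round(simulate_weak_association(), 2))
```

A correlation near 0.3 means the points drift upward on average while individual schools scatter widely around the trend, which is roughly the pattern described for the scatterplot.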

In other words, there actually is an association, just not a strong one. This doesn't really carry any concrete policy implications, but it's noteworthy given all the consternation.

More importantly, there's plenty of room for reasonable disagreements about the early teacher evaluation results, which, along with a variety of other quantitative and qualitative information, should inform the crucial process of making early adjustments to the system. In Florida, however, these efforts have been sidetracked by the false premise that teacher and school ratings should "match."

The source of much of this confusion is that Florida's school grades, even after more than 10 years, are still commonly portrayed and viewed, even by high-level officials, as measures of school performance (i.e., schools' influence on student progress) rather than student performance (i.e., how highly students score, which doesn't account for the fact that students enter schools at different levels).

The grades measure both, but it's actually the latter more than the former.*

There is a reason why over 97 percent of Florida's lowest-poverty schools receive A or B grades, and virtually every one of the schools receiving a D or F has a poverty rate above the median. It's because schools are judged largely by absolute performance, and students from higher-income families tend to score higher on tests.

This is the opposite of how teacher evaluations are supposed to work, as is evident in Florida's aggressive efforts to point out that the results of its value-added model, unlike its school grades, are not associated with student characteristics such as poverty.

In other words, Florida, like U.S. accountability systems in general, judges schools' test-based performance in large part using a standard - how highly students score - that is widely (and correctly) acknowledged as something that should be controlled for, whether directly (e.g., in value-added models) or indirectly (e.g., in classroom observation protocols), when assessing teacher performance.
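The difference between judging how highly students score and judging how much they progress can be shown with two hypothetical schools whose students gain the same amount but start at different levels. This is a minimal sketch under simplifying assumptions: the `Student` class and both functions are hypothetical names, and the growth function is a plain gain score, not the value-added model Florida actually uses.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Student:
    prior_score: float    # score when the student entered the year
    current_score: float  # score at the end of the year


def status_measure(students):
    """Absolute performance: average current score, ignoring starting points."""
    return mean(s.current_score for s in students)


def growth_measure(students):
    """Simple gain score: average change from prior to current score.

    A real value-added model would instead model current scores as a function
    of prior scores (and often student characteristics); this is a simplification.
    """
    return mean(s.current_score - s.prior_score for s in students)


# Two hypothetical schools: different intake levels, identical gains.
school_x = [Student(300, 320), Student(310, 330)]
school_y = [Student(250, 270), Student(260, 280)]

print(status_measure(school_x), status_measure(school_y))  # 325 275
print(growth_measure(school_x), growth_measure(school_y))  # 20 20
```

On the status measure, school X looks far stronger; on the growth measure, the two schools are identical. A system built mostly on the first will track student intake (and hence poverty), while one built on the second is designed not to.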

Obviously, this is not an inherently "bad" thing - there is a legitimate role for both growth and absolute performance in school-level accountability systems (though the roles, in many cases, are not properly delineated).

However, we need to stop conflating these different types of measures, or we will continue to confront risky situations such as that currently unfolding in Florida, where officials are thinking about imposing "fidelity" on two systems that, as currently designed, are really not meant to be together.

* There are a few reasons why this is the case in Florida (and it's also the case, to varying degrees, in virtually every other state). First, straight proficiency rates count (nominally) for half of a school's grade. Second, another 25 percent is based on a "growth" measure that is highly redundant with proficiency. Third and finally, Florida's system pays no attention to the comparability of its measures, and there is far greater variation in the absolute performance components than in the growth measures. This means that the former play a larger role in determining the variation in final scores than their nominal weights suggest.
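The third point, that components with more variation carry more weight than their nominal share, can be sketched with a standard variance decomposition: each component's contribution to the variance of a weighted composite is its nominal weight times its covariance with the composite, divided by the composite's variance. These contributions sum to one even when the components are correlated. The function name and the two-component toy data below are hypothetical and use equal nominal weights, not Florida's actual formula, which has more components.

```python
from statistics import mean


def effective_weights(components, weights):
    """Share of the composite's variance attributable to each component.

    components: dict name -> list of scores (one per school)
    weights:    dict name -> nominal weight in the composite
    contribution_k = w_k * cov(x_k, composite) / var(composite)
    """
    names = list(components)
    n = len(components[names[0]])
    composite = [sum(weights[k] * components[k][i] for k in names) for i in range(n)]
    mc = mean(composite)
    var_c = sum((c - mc) ** 2 for c in composite) / (n - 1)

    shares = {}
    for k in names:
        xs = components[k]
        mx = mean(xs)
        cov = sum((x - mx) * (c - mc) for x, c in zip(xs, composite)) / (n - 1)
        shares[k] = weights[k] * cov / var_c
    return shares


# Toy data: equal nominal weights, but proficiency varies five times as much.
toy_components = {"proficiency": [10, -10, 10, -10], "growth": [2, 2, -2, -2]}
shares = effective_weights(toy_components, {"proficiency": 0.5, "growth": 0.5})
print({k: round(v, 3) for k, v in shares.items()})
# {'proficiency': 0.962, 'growth': 0.038}
```

Despite a 50/50 nominal split, the high-variance component drives about 96 percent of the differences in the composite in this toy case, which is the mechanism the footnote describes.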

I wonder how results would change if you controlled for the distribution of teachers within a school. E.g., look at the size of teachers' classes as a weight on their impact on the school's overall performance. Don't know what's available in the data, but that would be interesting.

I think what Mr. Dicarlo fails to understand is that having different methods that both have high stakes attached to them is ludicrous at best. District researchers across the state have told their boards why the methods yield different results, yet it's impossible to defend that the results should differ. Having a school go from a "C" to an "A", receive state funds for that "A" grade, and then have VAM scores that suggest you have 10 Unsatisfactory teachers removes credibility from the ENTIRE system.