The Sensitive Task Of Sorting Value-Added Scores

The New Teacher Project’s (TNTP) recent report on teacher retention, called “The Irreplaceables,” garnered quite a bit of media attention. In a discussion of this report, I argued, among other things, that the label “irreplaceable” is a highly exaggerated way of describing their definitions, which, by the way, varied among the five districts included in the analysis. In general, TNTP's definitions are better described as “probably above average in at least one subject” (and this distinction matters for how one interprets the results).

I’d like to elaborate a bit on this issue – that is, how to categorize teachers’ growth model estimates, which one might do, for example, when incorporating them into a final evaluation score. This choice, which receives virtually no discussion in TNTP’s report, is always a judgment call to some degree, but it’s an important one for accountability policies. Many states and districts are drawing those very lines between teachers (and schools), and attaching consequences and rewards to the outcomes.

Let's take a very quick look, using the publicly-released 2010 “teacher data reports” from New York City (there are details about the data in the first footnote*). Keep in mind that these are just value-added estimates, and are thus, at best, incomplete measures of the performance of teachers (however, importantly, the discussion below is not specific to growth models; it can apply to many different types of performance measures).

Just to keep this simple, I will lay out four definitions of a “high performing” NYC teacher (or, more accurately, teachers with relatively high value-added scores). I’m not a huge fan of percentile ranks, but I will use them here, for reasons related to data availability (see the first footnote).

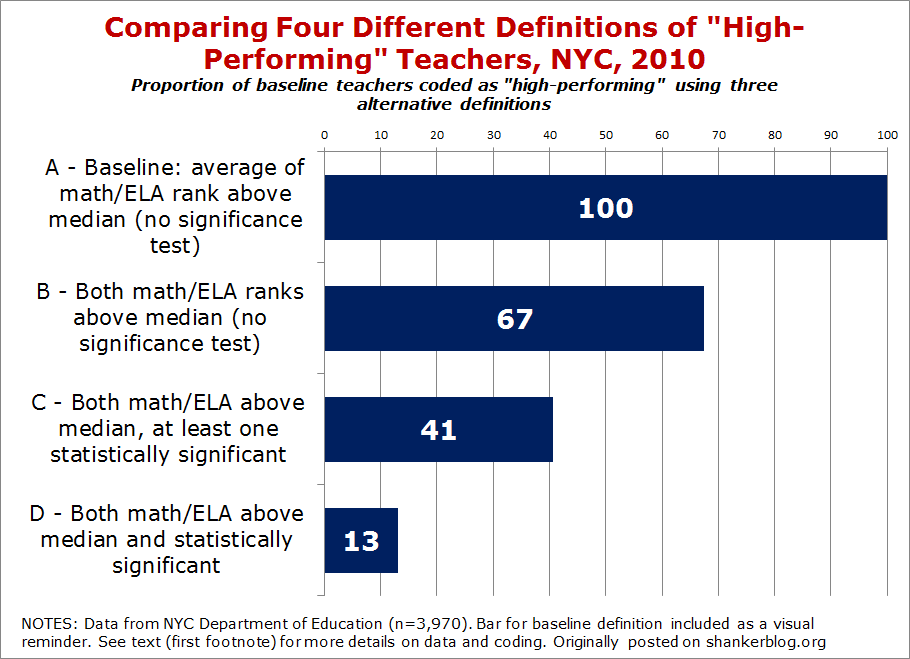

Here are the four definitions (they are, in a sense, in ascending order of "toughness"):

- Definition A – This is the most inclusive, "baseline" definition. Teachers must simply have an average percentile rank (the mean of math and ELA) greater than or equal to the median (averaging ranks is bad practice, but it’s fine for our illustrative purposes here). Predictably, about half (48 percent) of teachers make the cut.

- Definition B – Teachers must receive ranks greater than or equal to the median in both math and ELA. Unlike in Definition A, a high rank in one subject cannot "compensate" for a low rank in the other.

- Definition C – This is TNTP’s “District D” definition. Both ranks must be at or above the median, and at least one must be statistically significant (that is, distinguishable from the median once the error margin is taken into account).

- Definition D – This final definition is the “toughest.” Teachers must have ranks that are at or above the median, and statistically significant, in both math and ELA.
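
The four definitions above can be sketched in a few lines of Python. This is only an illustration, not the code behind the analysis: the names (`SubjectEstimate`, `definitions_met`) are hypothetical, the median is taken to be the 50th percentile, and "statistically significant" is assumed to mean that the lower bound of a teacher's error margin sits at or above the median.

```python
from dataclasses import dataclass

MEDIAN = 50  # assumption: the median cutoff is the 50th percentile rank


@dataclass
class SubjectEstimate:
    """Hypothetical container for one teacher's estimate in one subject."""
    rank: float         # percentile rank of the value-added estimate
    lower_bound: float  # lower end of the error margin, as a percentile rank

    def at_or_above_median(self) -> bool:
        return self.rank >= MEDIAN

    def significantly_above_median(self) -> bool:
        # Assumed reading of "statistically significant": the error
        # margin's lower bound is itself at or above the median.
        return self.lower_bound >= MEDIAN


def definitions_met(math: SubjectEstimate, ela: SubjectEstimate) -> set:
    """Return the labels (A-D) of the definitions a teacher meets."""
    met = set()
    # A: the mean of the two ranks is at or above the median.
    if (math.rank + ela.rank) / 2 >= MEDIAN:
        met.add("A")
    # B: each rank is at or above the median on its own.
    if math.at_or_above_median() and ela.at_or_above_median():
        met.add("B")
        # C: B, plus at least one subject is statistically significant.
        if math.significantly_above_median() or ela.significantly_above_median():
            met.add("C")
        # D: B, plus both subjects are statistically significant.
        if math.significantly_above_median() and ela.significantly_above_median():
            met.add("D")
    return met
```

For example, `definitions_met(SubjectEstimate(80, 55), SubjectEstimate(30, 10))` meets only Definition A: the high math rank pulls the average above the median, but the ELA rank falls short on its own.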

You may also notice that these definitions are “nested” – that is, any teacher who makes the cut for a given definition also makes it for all the “lower” definitions. For example, every teacher who is identified by Definition C also makes it under Definitions A and B. This nesting gives us a nice, easy way to compare the different definitions, as in the accompanying graph, which shows the percentage of teachers meeting Definition A who also meet Definitions B, C and D.

The first bar – the “baseline” group meeting Definition A – is superfluous (it’s just a visual reminder that the other bars all apply to the teachers – roughly half of the sample – who meet the first definition).

You can see that each definition narrows the original group considerably. About two-thirds of our baseline teachers meet Definition B, roughly two in five meet Definition C and 13 percent meet Definition D.

It’s not surprising that “tougher” criteria identify fewer teachers, but it does demonstrate how even seemingly slight variations (e.g., Definitions A and B) can generate substantial shifts in the results. States and districts, whether they are choosing these definitions or analyzing the first rounds of results, should be checking for this, especially if they're attaching consequences to the ratings. And we should always be careful about taking ratings at face value.

It also illustrates how much of a difference accounting for error margins can make when dealing with noisy estimates. For instance, compare Definitions B and D (the second and fourth bars in the graph). Both require that teachers be ranked at or above the median in both subjects. The only difference between them is that D says the estimates must be above the median and statistically significant. This requirement decreases the number of teachers identified by about 80 percent. In fact, only about seven percent of all teachers in the NYC sample meet the Definition D requirements.
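
As a rough check on that arithmetic, here is a small sketch that uses only the rounded shares quoted in this post (48 percent, "about two-thirds" and 13 percent). The variable names are hypothetical, and because the inputs are rounded, the results are approximate.

```python
# Rounded shares quoted in the post (inputs are approximate).
share_a_of_all = 0.48  # Definition A, as a share of all teachers in the sample
share_b_of_a = 2 / 3   # "about two-thirds" of the baseline group meet Definition B
share_d_of_a = 0.13    # 13 percent of the baseline group meet Definition D

# Relative drop in identified teachers when moving from Definition B to D.
reduction_b_to_d = 1 - share_d_of_a / share_b_of_a

# Definition D expressed as a share of the whole sample, not just the baseline.
share_d_of_all = share_a_of_all * share_d_of_a

print(f"Reduction from B to D: {reduction_b_to_d:.1%}")
print(f"Definition D as a share of all teachers: {share_d_of_all:.1%}")
```

With these rounded inputs, the reduction comes out at roughly 80 percent and the overall share at a little over six percent. The latter is slightly lower than the figure quoted above, presumably because the inputs used here are rounded.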

Of course, one cannot say which, if any, of these definitions is “correct” by some absolute standard (though I personally favor some use of error margins, even at relaxed confidence levels, and, again, I wouldn't call any of them "irreplaceable"). The point, rather, is that it matters where and how you set the bar. This may sound obvious, but there are hundreds of districts currently making these somewhat arbitrary decisions, and one can only hope that they realize their ramifications.

- Matt Di Carlo

*****

* In order to best approximate how states and districts are approaching their new evaluation systems, I use the single-year value-added estimates. In addition, since the NYC TDR data only include error margins for the single-year scores in the form of upper- and lower-bound percentile ranks, rather than point estimates, I use percentile ranks for this analysis. Unfortunately, the city assigned percentile ranks by experience category (one year, two years, three years, more than three years, co-teaching and unknown). Therefore, I limit the sample to teachers with more than three years of experience (the results for the other experience categories are similar). Finally, the analysis is also limited to teachers with non-missing 2010 estimates in both math and ELA (it’s not clear whether TNTP imposed this restriction, but it seems sensible). The final sample size is 3,970.