Is California's "API Growth" A Good Measure Of School Performance?

California calls its “Academic Performance Index” (API) the “cornerstone” of its accountability system. The API is calculated as a weighted average of the proportions of students meeting proficiency and other cutoffs on the state exams.
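To make the “weighted average” idea concrete, here is a minimal Python sketch of an API-style index for a single subject. It assigns each performance band a point value and weights those values by the share of students in each band. The band names and point values are illustrative assumptions, and the sketch ignores any weighting across subjects and grades that the official index applies.

```python
# Hypothetical sketch of an API-style index for ONE subject.
# Band names and point values are illustrative assumptions, not the
# official California formula.
BAND_POINTS = {
    "far_below_basic": 200,
    "below_basic": 500,
    "basic": 700,
    "proficient": 875,
    "advanced": 1000,
}


def api_style_index(band_shares: dict[str, float]) -> float:
    """Weighted average of band point values, weighted by the share of
    tested students in each band. Shares must sum to 1."""
    total = sum(band_shares.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"band shares must sum to 1, got {total}")
    return sum(BAND_POINTS[band] * share for band, share in band_shares.items())


# Made-up distribution of one school's students across bands.
school = {
    "far_below_basic": 0.10,
    "below_basic": 0.15,
    "basic": 0.30,
    "proficient": 0.30,
    "advanced": 0.15,
}
print(api_style_index(school))
```

In this sketch the index moves only when students cross a band cutoff; score gains that stay within a band leave it unchanged.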

It is a high-stakes measure. “Growth” in schools’ API scores determines whether they meet federal AYP requirements, and it is also important in the state’s own accountability regime. In addition, toward the middle of last month, the California Charter Schools Association called for the closing of ten charter schools based in part on their (three-year) API “growth” rates.

Putting aside the question of whether the API is a valid measure of student performance in any given year, using year-to-year changes in API scores in high-stakes decisions is highly problematic. The API is a cross-sectional measure (it doesn’t follow students over time), so treating its changes as progress requires assuming that they do not reflect shifts in the demographics or other characteristics of the cohorts of students taking the tests. Moreover, even if changes in API scores do reflect “real” progress, they do not account for the many factors outside of schools’ control that might affect performance, such as funding and differences in students’ backgrounds (see here and here, or this Mathematica paper, for more on these issues).
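A toy example shows how a cross-sectional measure can “grow” with no change in how well the school serves any given student. The sketch assumes two hypothetical student subgroups whose proficiency rates are held fixed in both years; only the mix of test takers changes. All numbers are made up for illustration.

```python
# Hypothetical illustration: subgroups perform exactly the same in both
# years, yet the school-wide rate changes because the cohort mix shifts.
def schoolwide_rate(groups):
    """groups: list of (share_of_test_takers, proficiency_rate) pairs."""
    return sum(share * rate for share, rate in groups)


# Assumed subgroup proficiency rates, held fixed across both years.
RATE_A, RATE_B = 0.70, 0.40

year1 = [(0.50, RATE_A), (0.50, RATE_B)]  # 50/50 mix of test takers
year2 = [(0.60, RATE_A), (0.40, RATE_B)]  # cohort shifts toward group A

change = schoolwide_rate(year2) - schoolwide_rate(year1)
print(f"{change:+.3f}")
```

Here the school-wide proficiency rate rises by three percentage points purely because the second cohort contains more students from the higher-scoring group.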

Better data are needed to test these assumptions directly, but we might get some idea of whether changes in schools’ API are good measures of school performance by testing how stable they are over time.

Put simply, if changes in the API are good measures, we should expect schools’ “growth rates” to be somewhat consistent. For example, a school that picks up ten points between 2009 and 2010 should be expected to perform similarly between 2010 and 2011.

Needless to say, we can tolerate a lot of fluctuation here. Some schools, for whatever reason, will get wildly different results between years, while many others will vary modestly. The stability need not be perfect, or even extremely high, but most schools don’t change that much over short periods of time. So, on the whole, it is reasonable to expect at least moderate consistency in schools’ API “growth rates.”

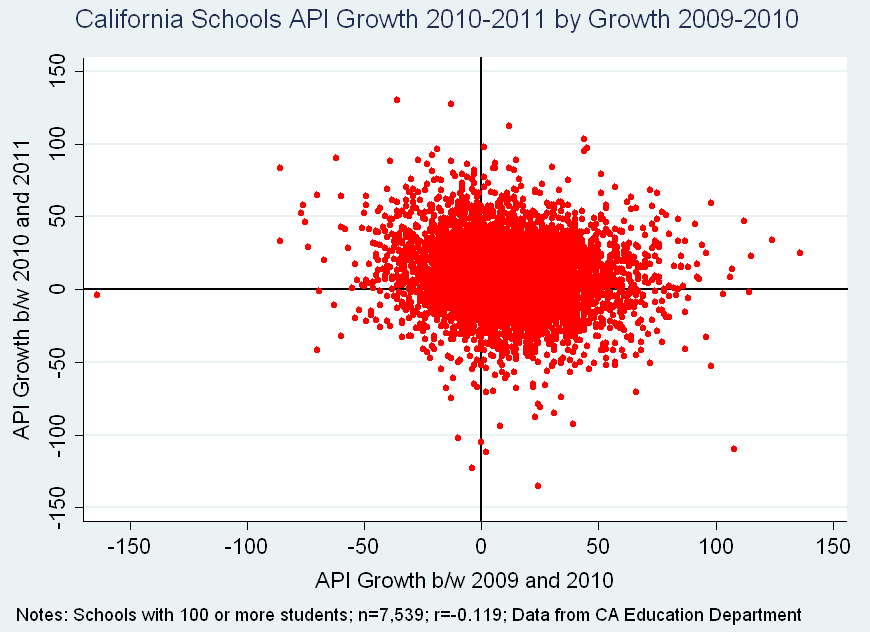

Using data from the California Department of Education, the graph below portrays stability in API “growth” over a three year period (i.e., two year-to-year changes). Each dot is a school. The vertical axis represents the change in school API (i.e., "API growth") between 2010 and 2011, while the horizontal axis is the change between 2009 and 2010. In order to mitigate some of the random error from small samples, schools with fewer than 100 test takers are excluded.

The scatterplot suggests that there is little discernible relationship between schools’ 2009-10 and 2010-11 API changes (the correlation coefficient is actually negative, at -0.12).

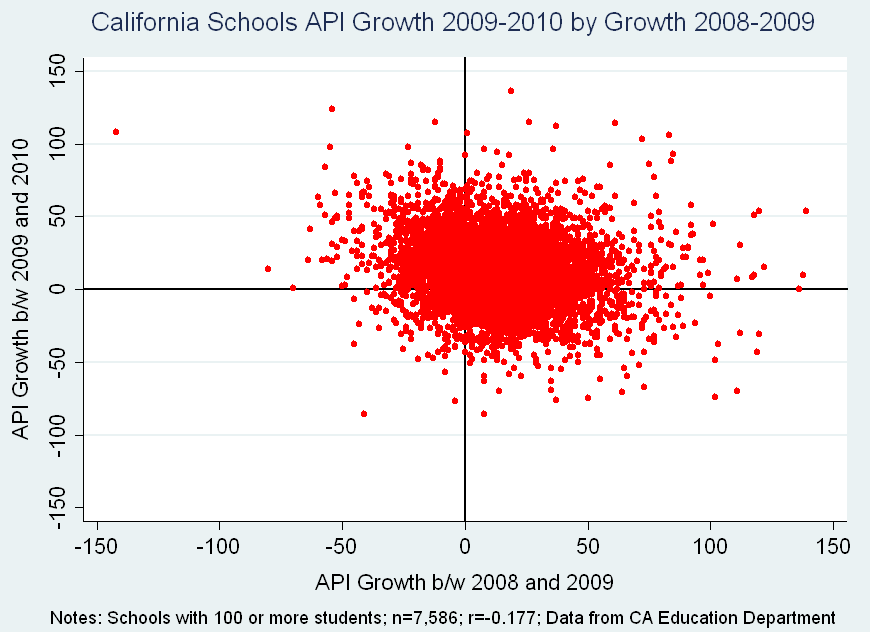

Just to make sure that the 2009-11 period (growth between 2009 and 2010 compared with that between 2010 and 2011) is not somehow anomalous, let’s go back one more year and present the same results for 2008-10.

The situation is no different: once again, there is an essentially random relationship between API growth during the two transitions (2008-2009 and 2009-2010). Schools that showed strong “growth” in their API scores in the first transition were almost as likely to be relatively flat or negative in the second transition as they were to repeat their performance.*

So, to whatever degree the stability of year-to-year changes in California schools’ API scores is indicative of the measure’s quality, that quality is poor indeed.

This instability should not surprise anyone, and you’ll find the same situation in other states using similar measures. Unadjusted year-to-year changes in cross-sectional testing results (especially proficiency-based measures) are subject to several serious sources of error, both random and systematic. It is inappropriate to use these data in high-stakes decisions.

In order to have a positive impact, “data-driven decisions” need high-quality data, interpreted properly. Year-to-year changes in California’s API are to some degree little more than coin tosses.

- Matt Di Carlo

******

* The correlation between the 2010-11 change and the average year-to-year change between 2007 and 2010 is no stronger – i.e., additional years of data don’t do a better job predicting “future” growth.
