A Few Quick Fixes For School Accountability Systems

Our guest authors today are Morgan Polikoff and Andrew McEachin. Morgan is Assistant Professor in the Rossier School of Education at the University of Southern California. Andrew is an Institute of Education Sciences postdoctoral fellow at the University of Virginia.

In a previous post, we described some of the problems with the Senate's Harkin-Enzi plan for reauthorizing the No Child Left Behind Act, based on our own analyses, which yielded three main findings. First, selecting the bottom 5% of schools for intervention based on changes in California’s composite achievement index resulted in remarkably unstable rankings. Second, identifying the bottom 5% based on schools' lowest performing subgroup overwhelmingly targeted those serving larger numbers of special education students. Third and finally, we found evidence that middle and high schools were more likely to be identified than elementary schools, and smaller schools more likely than larger schools.

None of these findings was especially surprising (see here and here, for instance); all of them could easily have been anticipated. Thus, we argued that policymakers need to pay more attention to the vast (and rapidly expanding) literature on accountability system design.

In a follow-up analysis, we decided to apply the above approach to California’s accountability system in hopes of influencing ongoing discussions in Sacramento about how best to revise the state's decade-old Public Schools Accountability Act (for the full brief, see here). To that end, we applied some of the same descriptive methods to statewide, school-level data on California’s Academic Performance Index (API).

Similar to indices used by other states, the API is a composite measure of student achievement ranging between 200 and 1000.*

The goal is an API of 800. Schools with APIs below 800 can meet their annual targets if they make up at least 5% of the difference between their API score and 800—akin to the school-level growth-to-proficiency model used in NCLB’s Safe Harbor provisions. While only California uses these exact measures, many states have adopted API-like techniques in their new NCLB-waiver accountability systems.
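As a rough illustration of the target rule described above, the following Python sketch computes a school's annual growth target as 5% of the gap between its API and 800. The function names are hypothetical, and the sketch deliberately leaves out anything the paragraph does not state: how schools already at or above 800 are treated (here, assumed to need no gain), rounding, and any minimum-target provisions in the official PSAA rules.

```python
def annual_growth_target(api: float, goal: float = 800.0, share: float = 0.05) -> float:
    """Points a school must gain this year: `share` of the gap between its API and `goal`.

    Assumption: a school already at or above the goal is treated here as needing no gain;
    the official rules may handle such schools (and rounding or minimums) differently.
    """
    gap = goal - api
    return share * gap if gap > 0 else 0.0


def meets_target(api_prev: float, api_curr: float) -> bool:
    """True if the year-over-year API gain is at least the target implied by last year's API."""
    return api_curr - api_prev >= annual_growth_target(api_prev)


print(annual_growth_target(600))  # 10.0 (5% of the 200-point gap to 800)
print(meets_target(600, 611))     # True
print(meets_target(600, 605))     # False
```

Note how the required gain shrinks as a school approaches 800: a school at 700 needs only 5 points, half of what a school at 600 needs.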

We assessed the API (and year-to-year changes in API) as a measure of school performance and as a means of identifying low-performing schools. We also offered recommendations for how the state might improve the index going forward.

As expected, our analyses of the existing API showed many of the same problems identified throughout the literature and in our previous post. For instance, we found:

- API scores are as highly correlated with student demographics as is NCLB's measure of Adequate Yearly Progress;

- Changes in API from year to year are remarkably unstable: the correlation between a school's API change in one year and its change in the next is around -.2;

- API and changes in API are biased against middle and high schools;

- Changes in API are biased against small schools.

With these findings in mind, we made several suggestions for revising the API. Our goal was to propose changes that not only could be implemented relatively easily but also would markedly improve the identification of schools in need of intervention and/or additional support.

Our first suggestion was one that has been made repeatedly on this blog and in the literature: school-level changes in achievement levels are very poor measures of achievement growth, and they really should not be used for any important decisions. If the state wants to measure achievement growth in a manner that is useful for educators, policymakers, and other stakeholders (and it should), it must use a student-level growth model. We did not go so far as to recommend a particular growth model, but there are many to choose from (including models already in use in other states). In short, the state would not need to reinvent the wheel here.
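To make the distinction between school-level changes and student-level growth concrete, here is a deliberately simple Python sketch with hypothetical data: it compares the change in a school's mean score (which compares different groups of students each year) with the average gain of the same students tested in both years. The matched-gain function is only the most basic student-level measure imaginable, not a recommendation of any particular model; operational growth models typically do considerably more, such as conditioning on prior achievement.

```python
from statistics import mean


def change_in_school_mean(scores_prev: dict[str, float], scores_curr: dict[str, float]) -> float:
    """School-level change: this year's mean score minus last year's, whoever was tested."""
    return mean(scores_curr.values()) - mean(scores_prev.values())


def mean_matched_gain(scores_prev: dict[str, float], scores_curr: dict[str, float]) -> float:
    """Simplest student-level growth measure: average gain of students tested in both years."""
    matched = scores_prev.keys() & scores_curr.keys()
    if not matched:
        raise ValueError("no students were tested in both years")
    return mean(scores_curr[s] - scores_prev[s] for s in matched)


# Hypothetical school: two high scorers (c, d) leave, two lower scorers (e, f) arrive,
# and the students who stay (a, b) each gain 20 points.
last_year = {"a": 350, "b": 360, "c": 420, "d": 430}
this_year = {"a": 370, "b": 380, "e": 300, "f": 310}
print(change_in_school_mean(last_year, this_year))  # -50: the school mean falls
print(mean_matched_gain(last_year, this_year))      # 20: the students who stayed grew
```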

Second, if the state decides that student growth is an important measure of school performance, we recommend using multiple years of data to address some of the instability of growth measures (i.e., larger samples generate more precise estimates). Perhaps the most straightforward approach is to use a running average of two or three years in making determinations. High-stakes decisions about schools should not be made on the basis of a single year of relatively noisy growth data.
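A running average of the kind suggested above could be sketched as follows in Python. The function name and the option to weight each year by its number of tested students are illustrative assumptions; weighting by students is one way to reflect the point that larger samples give more precise estimates.

```python
def running_average(estimates, n_students=None, window=3):
    """Average the most recent `window` annual growth estimates for one school.

    If `n_students` (one count per year, aligned with `estimates`) is given, years with
    more tested students count more; otherwise each year counts equally.
    Uses fewer years if fewer than `window` are available.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    if not estimates:
        raise ValueError("need at least one year of estimates")
    recent = estimates[-window:]
    if n_students is None:
        return sum(recent) / len(recent)
    if len(n_students) != len(estimates):
        raise ValueError("need one student count per year")
    weights = n_students[-window:]
    return sum(e * w for e, w in zip(recent, weights)) / sum(weights)


yearly_growth = [12.0, -4.0, 7.0]  # hypothetical growth estimates, oldest first
tested = [40, 60, 50]              # hypothetical numbers of tested students
print(running_average(yearly_growth))                     # 5.0
print(round(running_average(yearly_growth, tested), 2))   # 3.93
```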

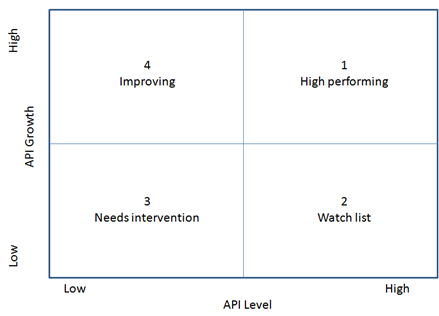

Third, rather than merely creating an arbitrarily-weighted composite of achievement levels and growth, we recommend examining level and growth together, and creating interventions to address schools of various profiles. At the crudest level, the state could use a figure like the one below, which merely separates schools into high and low levels (e.g., absolute API scores) and high and low growth.

Schools with low levels and high growth might not need much intervention at all (though these schools are largely identified as failing under both NCLB and PSAA). In contrast, schools with low levels and low growth are more likely to need additional support or intervention. For these schools, it could be useful to evaluate the policies and practices used by their high-growth (but similarly low-level) peers.
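The two-by-two grouping in the figure could be computed along these lines. This Python sketch assumes each school has a level (e.g., API score) and a growth measure, and it uses the statewide medians as cut points purely for illustration; where to draw the lines would be a policy decision, and the example data are made up.

```python
from statistics import median


def classify_schools(schools: dict[str, tuple[float, float]]) -> dict[str, str]:
    """Assign each school to a level-by-growth quadrant.

    `schools` maps a school name to (level, growth). Assumption: cut points are the
    medians across all schools supplied; a real system would set them by policy.
    """
    level_cut = median(level for level, _ in schools.values())
    growth_cut = median(growth for _, growth in schools.values())
    quadrants = {}
    for name, (level, growth) in schools.items():
        level_label = "high level" if level >= level_cut else "low level"
        growth_label = "high growth" if growth >= growth_cut else "low growth"
        quadrants[name] = f"{level_label}, {growth_label}"
    return quadrants


example = {"A": (650, 12.0), "B": (640, -3.0), "C": (820, 1.0), "D": (810, 9.0)}
for name, quadrant in classify_schools(example).items():
    print(name, quadrant)
# A low level, high growth
# B low level, low growth
# C high level, low growth
# D high level, high growth
```

In the terms used above, school A is the kind that might need little intervention, while school B is the kind more likely to need support.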

Fourth, if a policy is implemented in which the bottom or top x% of schools are to be identified, we recommend making these calculations separately by school level and size. This is especially feasible in California, given the large number of schools in the state. For instance, instead of selecting the bottom 5% of all Title I schools, the state could first stratify schools by level (elementary, middle, and high) and by size (say, enrollment quartiles within each level). It could then select the bottom 5% from each of the resulting 12 level-size combinations.
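The stratified selection described above might look like this in Python. The `School` record, the use of rank-based enrollment quartiles computed within each level, and rounding the 5% up (so each non-empty cell contributes at least one school) are all illustrative assumptions rather than features of any existing state rule.

```python
from collections import defaultdict
from typing import NamedTuple


class School(NamedTuple):
    school_id: str
    level: str       # "elementary", "middle", or "high"
    enrollment: int
    score: float     # whatever performance measure is used for identification


def bottom_share_by_stratum(schools: list[School], percent: int = 5) -> set[str]:
    """Flag the lowest-scoring `percent`% of schools within each level x enrollment-quartile cell."""
    by_level = defaultdict(list)
    for school in schools:
        by_level[school.level].append(school)

    cells = defaultdict(list)
    for level, group in by_level.items():
        ranked = sorted(group, key=lambda s: s.enrollment)
        for rank, school in enumerate(ranked):
            quartile = rank * 4 // len(ranked)  # 0 (smallest) .. 3 (largest), within this level
            cells[(level, quartile)].append(school)

    flagged = set()
    for members in cells.values():
        k = (len(members) * percent + 99) // 100  # integer ceiling: at least one per cell
        lowest = sorted(members, key=lambda s: s.score)[:k]
        flagged.update(s.school_id for s in lowest)
    return flagged
```

With three levels and four quartiles this yields the 12 cells described above; because each cell is ranked only against itself, small schools and middle or high schools are no longer compared directly with large elementary schools.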

Finally, we wholeheartedly support expanding the set of key outcomes measured in the school evaluation system. If the maxim is "what you test is what you get," then basing 90% of a school's rating on ELA and mathematics will certainly crowd out other important content. At a minimum, we support giving science and social studies tests greater weight than they currently receive (these subjects are already tested). The state could also add tests in other subjects, if desired.
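To see how the subject weights drive the composite, the Python sketch below computes an API-style score from the per-test point values given in the footnote (200 for far below basic through 1000 for advanced), first averaging within each subject and then taking a weighted average across subjects. The school's counts and both weight schemes are hypothetical and are not the official API weights; the actual calculation may also include adjustments this sketch omits.

```python
# Point values from the footnote; the weights further down are hypothetical.
POINTS = {
    "far below basic": 200,
    "below basic": 500,
    "basic": 700,
    "proficient": 875,
    "advanced": 1000,
}


def subject_average(counts: dict[str, int]) -> float:
    """Average points per test in one subject, given counts of tests at each performance level."""
    n_tests = sum(counts.values())
    return sum(POINTS[level] * n for level, n in counts.items()) / n_tests


def composite_api(counts_by_subject: dict[str, dict[str, int]], weights: dict[str, float]) -> float:
    """Weighted average of subject averages, normalized by the total weight used."""
    total_weight = sum(weights[subject] for subject in counts_by_subject)
    weighted_sum = sum(
        weights[subject] * subject_average(counts)
        for subject, counts in counts_by_subject.items()
    )
    return weighted_sum / total_weight


school = {
    "ELA": {"basic": 10, "proficient": 10},
    "math": {"proficient": 20},
    "science": {"below basic": 5, "basic": 5},
    "social studies": {"advanced": 4},
}
math_reading_heavy = {"ELA": 0.50, "math": 0.35, "science": 0.10, "social studies": 0.05}
more_balanced = {"ELA": 0.35, "math": 0.30, "science": 0.20, "social studies": 0.15}
print(round(composite_api(school, math_reading_heavy), 1))  # 810.0
print(round(composite_api(school, more_balanced), 1))       # 808.1
```

Because this made-up school scores lower in science but higher in social studies, shifting weight toward those subjects changes its composite only modestly; for other schools the same shift could matter much more.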

Another option would be to measure some important "non-cognitive" skills, such as students' social/emotional adjustment or grit. For high schools, we strongly support measuring important post-graduation outcomes such as employment, college enrollment, and college success.

In any case, rather than bemoaning that the accountability system incentivizes schools to narrow the curriculum to math and reading, we hope California takes concrete steps to address the problem. While our proposals will not resolve every challenge in school accountability, they would go a long way toward fixing some of the most glaring flaws in how the API is used to identify low-performing schools.

- Morgan Polikoff and Andrew McEachin

*****

* Specifically, each test taken in a school is given a point value: 200 for far below basic, 500 for below basic, 700 for basic, 875 for proficient, and 1000 for advanced. These values are then averaged and weighted across subjects (math and reading account for approximately 85% of the weight) to arrive at a final composite API for each school.

What's the ballpark year-to-year correlation of student-level growth scores for a typical school?

You would expect them to be between .2 and .5, depending on the model and sample size. That is quite different from the -.2 observed with school-level measures like the API.

As the state-mandated standardized tests are basically used to rate schools and only minimally used to address the individual needs of students, wouldn't it make more sense to test a random sample of students instead of all students? It would save schools a significant amount of money and time.

Well, there are a few problems with that. First, there's a simple sample-size issue: estimates of school performance (particularly if based on growth) will be much less stable with a sampling method. Second, we're already down the road of using these tests to evaluate teachers as well, which would make the sample-size issues even more problematic. Third, if I had my druthers we'd attach at least a modicum of student stakes to these tests as well (I don't know if I speak for either my co-author or Mr. DiCarlo on this point), and that would really make sampling impossible.

The last point I want to make is that I think the goal should be an assessment system that is indeed instructionally useful. Rather than design a system that precludes that possibility, I'd like to work toward that goal.
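To put rough numbers on the sample-size point raised in the reply above, the Python sketch below computes the sampling standard error of a school's mean score under simple random sampling without replacement. The school size and score standard deviation are hypothetical, and the sketch ignores test measurement error; a growth measure built from the difference of two such sampled means would be noisier still.

```python
import math


def se_of_school_mean(score_sd: float, n_tested: int, n_enrolled: int) -> float:
    """Sampling standard error of a school's mean score under simple random sampling
    without replacement (includes the finite-population correction).

    Testing every student (n_tested == n_enrolled) removes sampling error entirely;
    test measurement error, which this sketch ignores, remains either way.
    """
    if not 1 <= n_tested <= n_enrolled:
        raise ValueError("need 1 <= n_tested <= n_enrolled")
    if n_enrolled == 1:
        return 0.0
    fpc = (n_enrolled - n_tested) / (n_enrolled - 1)
    return score_sd / math.sqrt(n_tested) * math.sqrt(fpc)


# Hypothetical school: 400 students, score standard deviation of 100 points.
for n in (400, 200, 50):
    print(n, round(se_of_school_mean(100.0, n, 400), 1))
# 400 0.0
# 200 5.0
# 50 13.2
```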