The FCAT Writing, On The Wall

The annual release of state testing data makes the news in every state, but Florida is one of those places where it is to some degree a national story.*

Well, it’s getting to be that time of year again. Last week, the state released its writing exam (FCAT 2.0 Writing) results for 2013 (as well as the math and reading results for third graders only). The Florida Department of Education (FLDOE) press release noted: “With significant gains in writing scores, Florida’s teachers and students continue to show that higher expectations and support at home and in the classroom enable every child to succeed.” This interpretation of the data was generally repeated without scrutiny in the press coverage of the results.

Putting aside the fact that the press release incorrectly calls the year-to-year changes “gains” (they are actually comparisons of two different groups of students; see here), the FLDOE's presentation of the FCAT Writing results, though common, is, at best, incomplete and, at worst, misleading. Moreover, the important issues in this case are applicable in all states, and unusually easy to illustrate using the simple data released to the public.

First, however, it's important to note that the administration of the FCAT Writing test changed in a manner that precludes straightforward comparison of the 2013 results to those from 2012. Prior to 2013, students were given 45 minutes to complete the “writing prompt,” or the essay portion of the exam. Starting with the 2013 test, they were given 60 minutes. It's not possible to know how this affected the results.

Accordingly, the technical documentation for the FCAT notes (in bold-faced type) that “caution should be used when comparing 2013 FCAT 2.0 Writing data to FCAT writing data from previous years.”

The FLDOE press release and “media packet” do mention the change in rules, but neither repeats the warning. Actually, the narratives of both documents do not seem to exercise much caution at all. Rather, they trumpet the “significant gains,” and use them to argue for the success of their policies. This is, to put it mildly, questionable.

Let’s move on to the actual results. Students receive FCAT 2.0 Writing scores between 1-6, and there is no official “passing score” for the exam (i.e., one analogous to "proficient" on the math and reading assessments).

Florida still opts to present the results in terms of the percentage of students at or above cut scores, with a primary focus on 3.5 as the benchmark, as this is what's used for school grades - i.e., schools are judged based on the proportion of students scoring 3.5 or above.

However, the percentage scoring above 3.0 is also generally considered noteworthy, since it was the school grades benchmark score prior to last year. It is instructive to take a look at both.

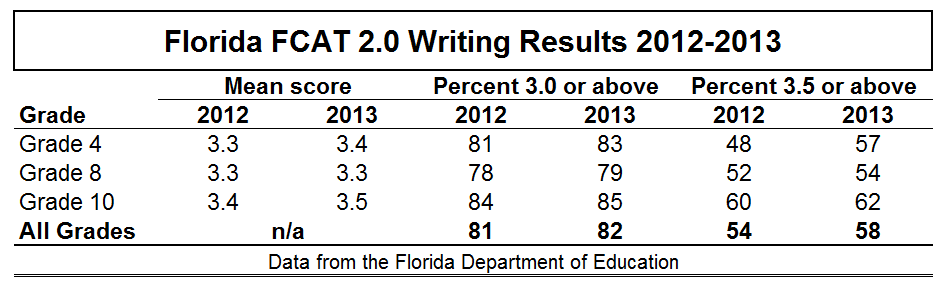

As you can see in the four rightmost columns of the simple table above, the percentage of students meeting the 3.5 threshold increased from 54 to 58 percent. This is the primary basis for the state's claim of "significant gains."

Now, if you look at the grade-level increases underlying the overall increase, you'll see that two of them (eighth and tenth grade) are quite small: two percentage points. The exception is the fourth grade rate, which increased nine percentage points (from 48 to 57, in the upper right hand of the table). This large spike of course drove much of the overall change.

Notice, however, that the percentage of those same fourth graders meeting the 3.0 threshold only increased two percentage points (from 81 to 83). Moreover, the overall change in the 3.0-based rate -- the increase combined across all three grades -- was only one percentage point, from 81 to 82 (essentially flat). In other words, the "significant gains" touted by the FLDOE are heavily concentrated in a single grade, and, more importantly, they basically disappear if one uses the previous benchmark of 3.0 instead of the current 3.5.

This illustrates a very important point about changes in these kinds of cutpoint-based rates, including proficiency rates, one that we’ve discussed here many times before: Trends in rates depend a great deal on where you set the cut score, and where students are “located” vis-à-vis that threshold.

If you use the 3.5 threshold, there was a moderate increase. But the rate using the 3.0 cut score, which was considered "passing" prior to 2012, was basically flat. It's the same exact data, but with a different line comes a rather different conclusion.
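To make this concrete, here is a minimal Python sketch using invented numbers, not actual FCAT data. It assumes scores are reported in half-point steps, and the `year_1` and `year_2` distributions and the helper `rate_at_or_above` are hypothetical, chosen only to show how a shift among students sitting just below one cut score can move that rate a lot while barely touching the other.

```python
# Hypothetical score distributions: score -> number of students (100 per year).
# These counts are invented for illustration; they are NOT FCAT data.
year_1 = {2.0: 10, 2.5: 10, 3.0: 35, 3.5: 25, 4.0: 15, 4.5: 5}
year_2 = {2.0: 10, 2.5: 9, 3.0: 26, 3.5: 35, 4.0: 15, 4.5: 5}


def rate_at_or_above(counts, cut):
    """Percentage of students whose score is at or above the cut score."""
    total = sum(counts.values())
    hits = sum(n for score, n in counts.items() if score >= cut)
    return 100 * hits / total


# Year-to-year change in the rate, in percentage points, under each cut score.
changes = {
    cut: rate_at_or_above(year_2, cut) - rate_at_or_above(year_1, cut)
    for cut in (3.0, 3.5)
}

for cut, change in changes.items():
    print(f"cut score {cut}: change of {change:+.0f} percentage points")
```

In this made-up example, most of the movement is from 3.0 to 3.5, so the 3.5-based rate rises ten points while the 3.0-based rate rises only one, even though both are computed from the same two sets of scores.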

Now, let's take a quick look at the average scores (in the leftmost columns of the table). By themselves, these data suggest that there was an increase (+0.1 points) in the performance of the "typical" fourth and tenth grade test taker over their counterparts from last year. However, the changes overall, including the flat score among eighth graders, may be too small to warrant strong conclusions under any circumstances, and definitely require cautious interpretation in this instance, given the extension of time students were given to complete the exam this year.

Moreover, looking at the average scores in tandem with the rates, we find similar (and related) sorts of inconsistencies as we do when comparing rates using different thresholds. Most notably, the average score was flat in eighth grade (3.3), but the 3.5-based passing rate increased two percentage points, and the rate using the 3.0 cutoff increased one percentage point. So, among eighth graders, there was really no discernible change at all in the performance of the “typical student,” but the pass rates suggest otherwise.

Finally, note how the fourth and tenth grade average scores both increased 0.1 points, but this corresponded with very different changes in the percentage of students scoring 3.5 or above – nine percentage points and two percentage points, respectively.
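The same point can be sketched in a few lines of Python. Everything here is hypothetical: "grade A" and "grade B" are invented groups of 100 students each, not Florida's fourth and tenth graders, and the helper functions are written just for this illustration. Both groups start from the same distribution and see the same increase in the average score, but the students who improved sit in different places relative to the 3.5 cut score.

```python
def mean_score(counts):
    """Average score for a distribution given as score -> number of students."""
    total = sum(counts.values())
    return sum(score * n for score, n in counts.items()) / total


def rate_at_or_above(counts, cut):
    """Percentage of students whose score is at or above the cut score."""
    total = sum(counts.values())
    return 100 * sum(n for score, n in counts.items() if score >= cut) / total


# Invented (before, after) distributions; these are NOT FCAT data.
grades = {
    # Improvement comes from students crossing the 3.5 line.
    "grade A": ({3.0: 50, 3.5: 50}, {3.0: 30, 3.5: 70}),
    # Improvement comes from students who were already at 3.5.
    "grade B": ({3.0: 50, 3.5: 50}, {3.0: 50, 3.5: 30, 4.0: 20}),
}

results = {}
for name, (before, after) in grades.items():
    mean_change = round(mean_score(after) - mean_score(before), 2)
    rate_change = rate_at_or_above(after, 3.5) - rate_at_or_above(before, 3.5)
    results[name] = (mean_change, rate_change)
    print(f"{name}: average {mean_change:+.1f}, 3.5 rate {rate_change:+.0f} points")
```

In both invented grades the average rises by 0.1 points, yet the 3.5-based rate jumps 20 points in one and does not move in the other. The rate only registers students who cross the line, while the average registers improvement wherever it happens.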

These kinds of inconsistencies occur all the time, and they can be quite misleading when cutpoint-based rates are considered in isolation, as is usually the case.**

(Side note: You'll find similar "anomalies" in the 2013 math and reading results for third graders, which was the only grade released for these subjects. The average math score decreased by one point, but the proficiency rate stayed flat. Conversely, the average score in third grade reading was flat, and the proficiency rate increased one point. Yet, echoing the press release, several news stories reported that math and reading "scores" had increased, when in fact neither had.)

To be clear, despite their measurement issues, proficiency and other cutpoint-based rates are not without advantages, including the fact that they are easier to understand than actual scores and provide a goal at which schools and educators can aim.

Moreover, Florida’s testing results may receive a great deal more attention than those of most other states and districts, but the issues described above are not at all unique to Florida. Indeed, the state at least goes to the trouble of reporting the scores along with the rates, which is not the case in other places. For instance, the test results from the District of Columbia Public Schools (DCPS) receive national attention, but DCPS does not release actual scores, only rates.

That said, changes in average scores are preferable to rates for gauging the performance of the “typical student.” This is because, unlike pass rates, they do not focus on just one point in the score distribution (i.e., the passing threshold). The FLDOE press release and media packet focus almost entirely on the rates, and therefore end up interpreting the results in an incomplete, potentially misleading manner (and, making things even worse, they call the rates “scores”).

*****

* This is in no small part because officials over the years have used the results to advocate for their policy preferences (I would argue inappropriately), but that's a different story.

** And remember that we normally cannot even assess changes in standard proficiency rates under different cut scores the way that we can here. Also keep in mind that these are state-level results, with a sample size of approximately 200,000 students in each grade. The problem is far more severe at the district- and school-levels, where smaller samples make it much more likely that these sorts of inconsistencies will arise.
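For a rough sense of scale on the sample size point, here is a back-of-the-envelope Python sketch. It rests on a strong simplifying assumption that is mine, not the state's: each tested group is treated as a simple random sample of independent students, so the textbook standard error of a proportion applies. The school size of 100 is hypothetical, and `standard_error_pct` is a helper written for this illustration.

```python
import math


def standard_error_pct(rate_pct, n_students):
    """Standard error of a rate, in percentage points, under the simplifying
    assumption that students are independent draws (simple random sampling)."""
    p = rate_pct / 100
    return 100 * math.sqrt(p * (1 - p) / n_students)


# A 58 percent rate measured on a state-sized group versus a hypothetical
# school with 100 tested students.
se_state = standard_error_pct(58, 200_000)
se_school = standard_error_pct(58, 100)

print(f"state-sized group: about {se_state:.2f} percentage points")
print(f"hypothetical school: about {se_school:.1f} percentage points")
```

Under these assumptions, the noise around a rate is roughly a tenth of a percentage point for a group of 200,000 students but close to five percentage points for a group of 100, which is larger than most of the year-to-year changes discussed above.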

Tony Bennett misusing data for political benefit?

Shocking!

NOTE TO READERS: The original version of this post included a sentence in which Florida Education Commissioner Tony Bennett was quoted as characterizing the 2013 FCAT Writing results as "incredible improvement."

The quote was taken from the following Orlando Sentinel article:

http://www.orlandosentinel.com/news/local/breakingnews/os-fcat-scores-2…

However, it seems that the article quotes Commissioner Bennett slightly out of context. According to an NPR StateImpact story (http://stateimpact.npr.org/florida/2013/05/24/bennett-ready-to-drill-in…), Commissioner Bennett was referring specifically to the FCAT Writing results among fourth graders, rather than overall, as implied in the Orlando Sentinel article (and this post).

The sentence containing the quote has therefore been deleted, with apologies.

MD

When reporters, news agencies, and the like receive these "media packets," why don't journalists and reporters actually investigate and educate themselves on this before they report it?? I don't get it, isn't that their job??